Expectations of privacy in public space

AR and VR intersect (and often conflict) with expectations of privacy in public space in ways that will only become more salient over time. There is varied and sustained engagement with this topic in the AR literature, particularly around AR devices, which can act as sophisticated surveillance systems. As Mark Pesce notes, “[f]ar less a new beginning than an extension and continuation of the existing and ever-deepening techniques of observation, analysis and feedback, AR mirrorshades offer an unprecedented opportunity to scrutinize user interactions in minute detail” (2020, p. 86).

In current research on AR specifically, there is a heavy emphasis on privacy in public around wearable and head-mounted technology. Early iterations of wearable head-mounted technology, namely Google Glass, were not adopted by the general public beyond those already in the tech industry. Nevertheless, many researchers wrote speculative accounts of Google Glass (Brinkman, 2014; Kostios, 2015; Wolf et al., 2018).

Others focused on questions of who and what is surveilled, with specific focus on privacy issues for people other than the users of the technology and for things in an environment (see de Guzman et al., 2019; Dainow, 2014). Wolf et al. (2018), for instance, encourage us to move away from a consideration of AR as a visual medium in their discussion of privacy. They suggest we instead focus on other forms of information that are captured by AR devices, such as voice and sound that may be present in an environment, which are currently overlooked in legislation and AR privacy discussions.

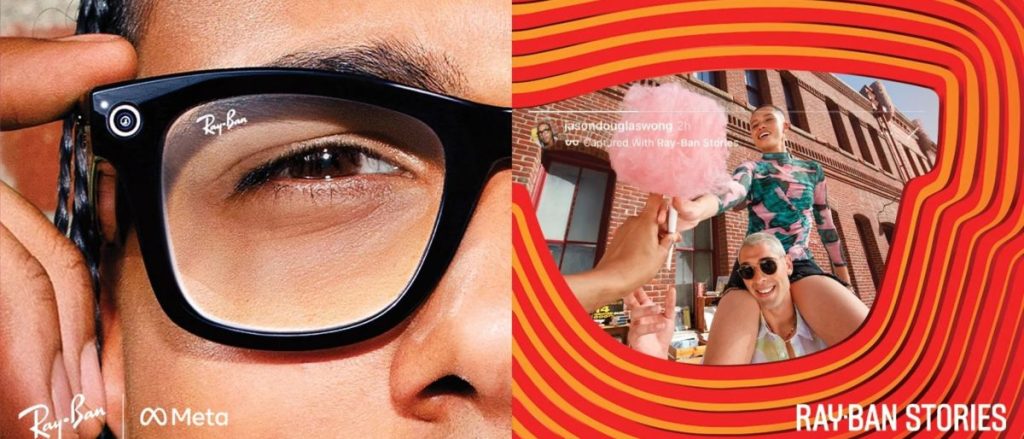

These concerns have once again become timely with the introduction of Ray-Ban Stories, produced by Meta. Stories are a form of wearable technology that can take photos and record short videos with audio. The glasses have a small red light to indicate that they are taking video or pictures. The release of Ray-Ban Stories was met with serious criticism around privacy and surveillance, often from groups that Meta had ‘consulted’ with (Egliston and Carter 2022).

It is very easy to conceal the privacy light with a small piece of black tape (Notopoulos 2021). However, tape is hardly necessary. As Joanna Stern reports from her time using Stories, many people did not know that they were being recorded until she told them, even though she did nothing to obscure the light that was meant to indicate that the Ray-Ban Stories were in use (Stern 2021). Furthermore, this is just the beginning of what Meta envisages for Ray-Ban Stories: the company is reportedly exploring adding facial recognition software (Mac 2021) and image recognition technology (Egliston and Carter 2022) to the glasses.

Other work (Mann, 2013; Mann and Ferenbok, 2013; Denning et al., 2014) touches on the potential for AR to foster an environment where everyone can surveil, terming this ‘sousveillance’. Presenting an optimistic outlook, Mann and Ferenbok (2013) suggest that this sousveillance represents a kind of political challenge to hierarchical, top-down surveillance by the powerful, facilitating a ‘surveillance from below’ (they give the example of recording the police as an accountability measure; 2013, p. 20).

The theme of privacy in public spaces with respect to AR was also key in legal perspectives. Wassom (2014) points out gaps in UK-based legal regulation around AR, including privacy, and Blodgett-Ford and Supponen (2018) highlight some of the US legal issues present in advertising via AR (and VR), such as the use of biometric and geographic data collection for advertising. Meese (2014) focuses on the legal blind spots in Australian privacy law in regulating widespread AR technologies (noting issues specific to Australia, such as a lack of regulatory or constitutional privacy protections as seen in Europe and the US respectively). Regulatory power is central to privacy in both AR and VR, as there is little input from corporations in this area. Since the release of Ray-Ban Stories, Meta has offered only a short list of privacy guidelines for users on respecting bystander privacy, with advice such as: always let people know when you are recording by using obvious gestures; obey the law and do not harass people; take your glasses off in private spaces such as locker rooms; and respect people’s preferences for being recorded (Meta 2022). This places the onus on the consumer to uphold the privacy of those around them.

Prior research also emphasises the relationship between AR, public space and the expectation of being able to seclude oneself from others and from particular forms of information. Kostios (2015) gives the example of users in public spaces projecting AR images onto private property, also discussed by Blitz (2018) as a form of ‘personalisation of space’ in the context of US constitutional law. There were also concerns about AR and the projection of harmful material (see Lemley and Volokh, 2018). Pesce (2017) gives the particularly striking example of AR’s weaponization as a tool for public hate speech. He writes:

“What if that blank canvas gets painted with hate speech? What if, perchance, the homes of ‘undesirables’ are singled out with graffiti that only bad actors can see? What happens when every gathering place for any oppressed community gets invisibly ‘tagged’? In short, what happens when bad actors use Facebook’s augmented reality to amplify their own capacity to act badly?” (2017, n.p.).

Robertson (2019) identifies some of these same concerns around the case of Mark AR, a mobile application allowing the creation and placement of persistent digital images in real world environments. She notes that the developers of the application have actively had to incorporate features to minimise the potential for AR’s weaponization, such as requiring real names and active human moderation. Further, safety apps such as Safetipin use user ratings of the ‘safety’ of certain locations that then determine how police patrol certain areas. This has troubling implications for who gets to determine what is ‘safe’ and what type of areas are deemed ‘unsafe’ (often poor, majority-POC neighbourhoods, or areas associated with sex workers, drug users etc.), which in turn creates over-policing of these areas (Le 2022). As AR technology gets more sophisticated, we need to take stock of how we are digitally inscribing physical spaces, and what it means for the communities that gather there (for a more detailed look at the politics of space, see here).

In contrast, while there is concern around expectations of privacy in public, a number of AR art practitioners have shown the expressive and activist potential of augmented public space. Skwarek, for instance, creates a virtually rendered elimination of the border between Israel and Palestine at the Gaza Strip (see Skwarek, 2018). Others have employed AR for subversive cultural commentary. Katz (2018) discusses the use of AR by artists to overlay artworks at the New York Museum of Modern Art with images or text (making artworks unrecognisable), the goal being to challenge the authority of high art as something often produced by individuals with certain social and class interests. While these examples do not ‘lessen’ the issues associated with AR as invasive, they do show that the augmentation of public space can at least be done for expressive or purposeful ends.

Discussions about the public use of VR, and its intersections with feelings and expectations of privacy, were relatively limited. In an account of the use of VR in art gallery spaces, Parker and Saker (2020) outline the qualitative experience of this increasingly popular ‘public’ use of VR. Inspired by Henri Lefebvre’s account of spatiality, Parker and Saker understand the art museum as both spatial and social, a dynamic that VR-based experiences alter. As they point out, through interviews with gallerygoers, VR created feelings of ‘freedom’, inasmuch as their view of the virtual space was not visible to others, providing a “mastery of space and autonomy that is rare in a crowded museum” (2020, p. 10). Conversely, their participants describe feelings of vulnerability, particularly in being watched using the technology, which we also found in our research into the use of VR videos in the zoo (Carter et al., under review). As scholars like Golding (2019) discuss elsewhere, VR is a medium that is imagined largely around the performance of an embodied spectacle, through the user making a range of bodily gestures corresponding to movements on the screen. Museums, as social, public spaces, are inherently characterised by a dynamic of watching others, something that Parker and Saker’s (2020) participants also felt to be intensified through VR, where the user’s bodily performance of VR became part of the museum experience. While Parker and Saker do not engage with ethics, what they underline here is the ways ‘private’ VR in public spaces still presents challenges in the context of existing expectations of privacy in public space.

References

Blitz, M. (2018). Augmented and virtual reality, freedom of expression, and the personalization of public space. In Barfield, W. & Blitz, J.M. (Eds), Research handbook on the law of virtual and augmented reality (pp. 304–339). Cheltenham, UK: Edward Elgar Publishing.

Blodgett-Ford, S.J. & Supponen, M. (2018). Data privacy legal issues in virtual and augmented reality advertising. In Barfield, W. & Blitz, J.M. (Eds), Research handbook on the law of virtual and augmented reality (pp. 471–512). Cheltenham, UK: Edward Elgar Publishing.

Brinkman, B. (2014). Ethics and pervasive augmented reality: Some challenges and approaches. In Pimple, K.D. (Ed.), Emerging pervasive information and communication technologies: Ethical challenges, opportunities and safeguards (pp. 149–175). Dordrecht, Netherlands: Springer.

Dainow, B. (2014). Ethics in emerging technology. ITNOW, 56(3), 16–18.

de Guzman, J., Thilakarathna, K. & Seneviratne, A. (2019). Security and privacy approaches in mixed reality: A literature survey. ACM Computing Surveys, 52(6), 1–37.

Denning, T., Dehlawi, Z. & Kohno, T. (2014). In situ with bystanders of augmented reality glasses: Perspectives on recording and privacy-mediating technologies. CHI ’14: Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (pp. 2377–2386). New York, NY: ACM.

Egliston, B., & Carter, M. (2022). ‘The metaverse and how we’ll build it’: The political economy of Meta’s Reality Labs. New Media & Society, 0(0). https://doi.org/10.1177/14614448221119785

Golding, D. (2019). Far from paradise: The body, the apparatus and the image of contemporary virtual reality. Convergence, 25(2), 340–353.

Katz, M. (2018). Augmented reality is transforming museums. Retrieved from https://www.wired.com/story/augmented-reality-art-museums/

Kostios, A. (2015). Privacy in an augmented reality. International Journal of Law and Information Technology, 23(2), 157–185.

Le, T. (2022). When it comes to sexual assault, safety apps aren’t the answer. Monash Lens. Retrieved from https://lens.monash.edu/@trang-le/2022/01/24/1384219/when-it-comes-to-sexual-assault-safety-apps-arent-the-answer.

Lemley, M.A. & Volokh, E. (2018). Law, virtual reality, and augmented reality. University of Pennsylvania Law Review, 166(5), 1051–1138.

Mac, R. (2021). Facebook is considering facial recognition for its upcoming smart glasses. Buzzfeed News. Retrieved from https://www.buzzfeednews.com/article/ryanmac/facebook-considers-facial-recognition-smart-glasses.

Mann, S. & Ferenbok, J. (2013). New media and the power politics of sousveillance in a surveillance-dominated world. Surveillance and Society, 11(1/2), 18–34.

Mann, S. (2013). Veillance and reciprocal transparency: Surveillance versus sousveillance, AR Glass, lifelogging, and wearable computing. In 2013 IEEE International Symposium on Technology and Society (pp. 1–12). New York, NY: IEEE.

Meese, J. (2014). Google Glass and Australian privacy law: Regulating the future of locative media. In Wilken, R. & Goggin, G. (Eds), Locative Media (pp. 136–147). New York, NY: Routledge.

Meta (2022). Ray-Ban Stories: Privacy. Meta. Retrieved from https://about.meta.com/reality-labs/ray-ban-stories/privacy.

Notopoulos, K. (2021). Facebook is making camera glasses, haha oh no. Buzzfeed News. Retrieved from https://www.buzzfeednews.com/article/katienotopoulos/facebook-is-making-camera-glasses-ha-ha-oh-no.

Parker, E., & Saker, M. (2020). Art museums and the incorporation of virtual reality: Examining the impact of VR on spatial and social norms. Convergence, 26(5–6), 1159–1173.

Pesce, M. (2017). The last days of reality. Meanjin. Retrieved from https://meanjin.com.au/essays/the-last-days-of-reality/

Pesce, M. (2020). Augmented Reality: Unboxing Tech’s Next Big Thing. John Wiley & Sons.

Robertson, A. (2019). Is the world ready for virtual graffiti? The Verge. Retrieved from https://www.theverge.com/2019/10/12/20908824/mark-ar-google-cloud-anchors-social-art-platform-harassment-moderation

Skwarek, M. (2018). Augmented reality activism. In Geroimenko, V. (Ed.), Augmented Reality Art: From an Emerging Technology to a Novel Creative Medium (pp. 3–40). Dordrecht, Netherlands: Springer.

Stern, J. (2021). Smart Glasses by Facebook and Ray-Ban Mix Cool and Creepy. Wall Street Journal. Retrieved from https://www.wsj.com/articles/rayban-stories-facebook-review-11631193687

Wassom, B. (2014). Augmented reality law, privacy, and ethics: Law, society, and emerging AR technologies. Waltham, MA: Syngress.

Wolf, K., Marky, K. & Funk, M. (2018). We should start thinking about privacy implications of sonic input in everyday augmented reality! In Mensch und Computer 2018 – Workshopband (pp. 353–359). Bonn, Germany: Gesellschaft für Informatik e.V. DOI: http://dx.doi.org/10.18420/muc2018-ws07-046

Recommended citation

Carter, M, Egliston, B, and Clark, K.E. (November, 2022) Expectations of privacy in public space. Critical Augmented and Virtual Reality Researchers Network (CAVRN). https://cavrn.org/expectations-of-privacy-in-public-space/

An earlier version of this literature review was published for the Socio-Tech Futures Lab in 2020. This version has been updated to include more recent literature.

This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.